NXAI unveils its first time series model, TiRex, based on the novel xLSTM architecture – and immediately claims the top spot in well-known international benchmark leaderboards. With just 35 million parameters, TiRex is significantly smaller and more memory-efficient than its competitors. It not only excels in prediction accuracy but is also considerably faster. “We’re no longer talking about marginal improvements – TiRex delivers a substantial leap in quality over other models, both for short- and long-term forecasts,” explains Prof. Dr. Sepp Hochreiter, Chief Scientist at NXAI in Linz.

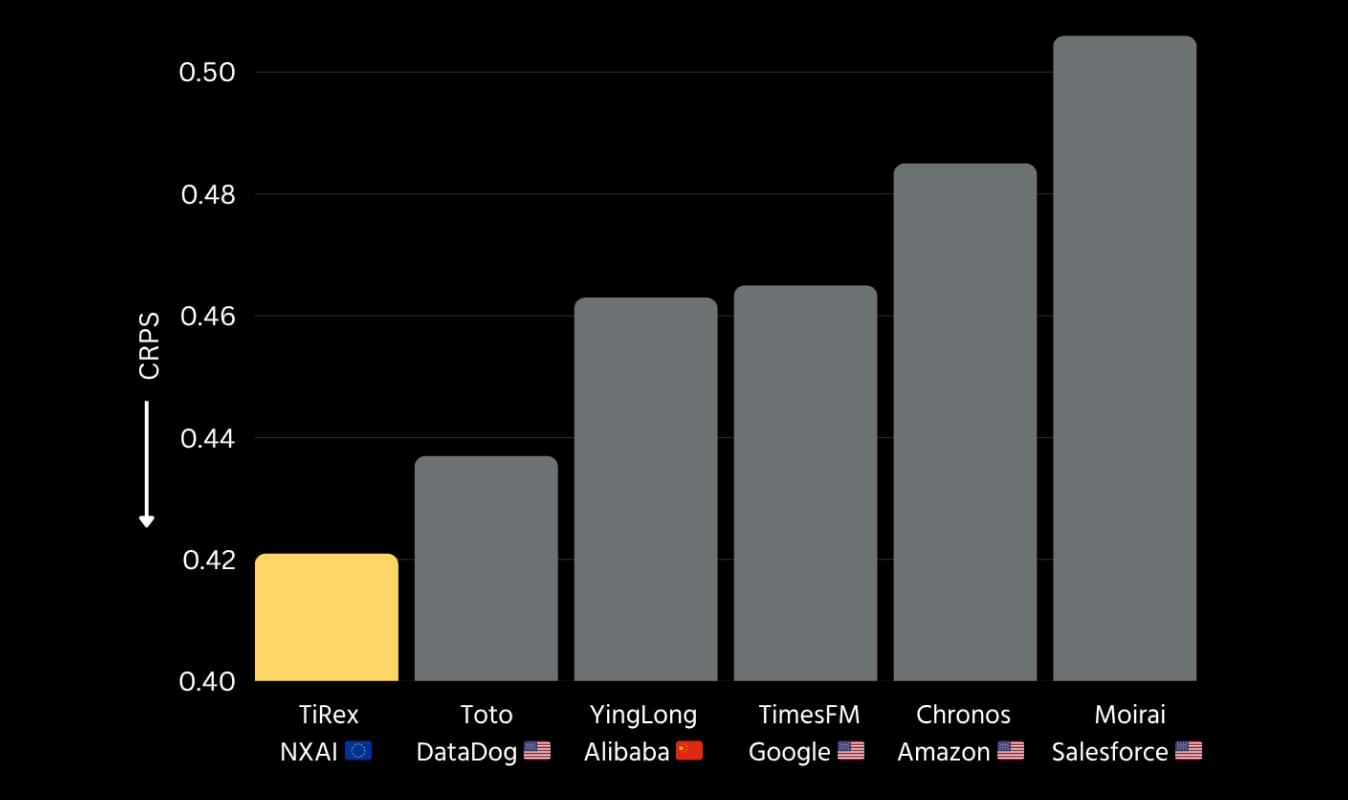

TiRex and major competitors (based on GiftEval CRPS) – Smaller is Better
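For readers unfamiliar with the metric in the chart: CRPS (Continuous Ranked Probability Score) rates a probabilistic forecast against the value that actually occurred, and lower scores are better. The sketch below uses the standard sample-based estimator, mean |X − y| minus half the mean |X − X′|. The function name `crps_from_samples` is our own illustration, and the exact computation used by the GiftEval leaderboard may differ (for example, a quantile-based approximation).

```python
def crps_from_samples(samples, observed):
    """Sample-based CRPS estimate: E|X - y| - 0.5 * E|X - X'|.

    `samples` are draws from the forecast distribution, `observed` is the
    value that actually occurred. Lower is better; 0 means a perfectly
    sharp forecast that hit the observed value exactly.
    """
    n = len(samples)
    if n == 0:
        raise ValueError("need at least one forecast sample")
    # Average distance between the forecast samples and the outcome.
    accuracy_term = sum(abs(x - observed) for x in samples) / n
    # Average pairwise distance between forecast samples (forecast spread).
    spread_term = sum(abs(a - b) for a in samples for b in samples) / (n * n)
    return accuracy_term - 0.5 * spread_term


# A forecast centred on the outcome scores better (lower) than one that is off.
good = crps_from_samples([4.0, 6.0], observed=5.0)
bad = crps_from_samples([9.0, 11.0], observed=5.0)
print(good, bad)
```

With a single sample the score reduces to the absolute error, which is why CRPS is often described as a probabilistic generalization of mean absolute error.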

While many people focus on large language models like ChatGPT, the real industrial potential lies elsewhere – in fast, efficient time series models used in cars, machines, conveyor systems, welding robots, and beyond. Time series data is everywhere, and it can be monetized or transformed into digital products. Pre-trained time series models are already being downloaded millions of times and are in active economic use.

NXAI’s TiRex model leverages in-context learning, enabling zero-shot forecasting – accurate predictions on new datasets without the need for additional training. “This allows non-experts to use the model for forecasting and enables easy integration into existing workflows. Moreover, improvements in forecasting accuracy become particularly apparent when data availability is limited,” explains Andreas Auer, Researcher at NXAI. This opens up new digital product models: for example, machinery manufacturers can offer customers TiRex-based solutions for optimization or commissioning. Thanks to in-context learning, the model adapts automatically to the customer's data – without retraining. “The key is how well a model generalizes to unseen time series – and TiRex excels at that,” adds Hochreiter.

A decisive advantage lies in the model’s ability to continuously monitor, analyze, and update the system state – known as state tracking. Transformer-based approaches lack this capability. TiRex, on the other hand, can approximate hidden or latent states over time, improving predictive performance. Its architecture offers another major benefit: it is adaptable to hardware and enables embedded AI applications.
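As a toy illustration of what state tracking means in general (this is not TiRex's actual mechanism), consider a machine whose hidden operating mode flips every time a switch event occurs. A recurrent update carries that mode forward step by step, so the current mode is always available without re-reading the full history. The function name `track_mode` and the event encoding are hypothetical, chosen only for this sketch.

```python
def track_mode(switch_events):
    """Track a hidden on/off mode that toggles on every switch event.

    `switch_events` is a sequence of 0/1 flags, one per time step
    (1 = a switch event happened). Returns the mode after each step,
    starting from mode 0 (off).
    """
    mode = 0
    modes = []
    for event in switch_events:
        # Recurrent update: new state depends only on old state and input.
        mode ^= event
        modes.append(mode)
    return modes


print(track_mode([1, 0, 1, 1]))  # -> [1, 1, 0, 1]
```

The point of the sketch is that the state after each step is a compact summary of everything seen so far, which is the property the paragraph above attributes to state tracking.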

“We adapt TiRex to a wide range of use cases – from battery charge prediction in the automotive industry, to optimizing material flows in intralogistics, reducing setup times, inspecting weld seams, and improving quality predictions,” says Hochreiter, who also teaches at JKU Linz. xLSTM models are even being used in medicine – for example in ECG analysis and cardiac segmentation at Imperial College London. Companies are now developing their first xLSTM-based time series models together with NXAI, benefiting from chip-level optimization that allows the models to run on low-power edge devices.

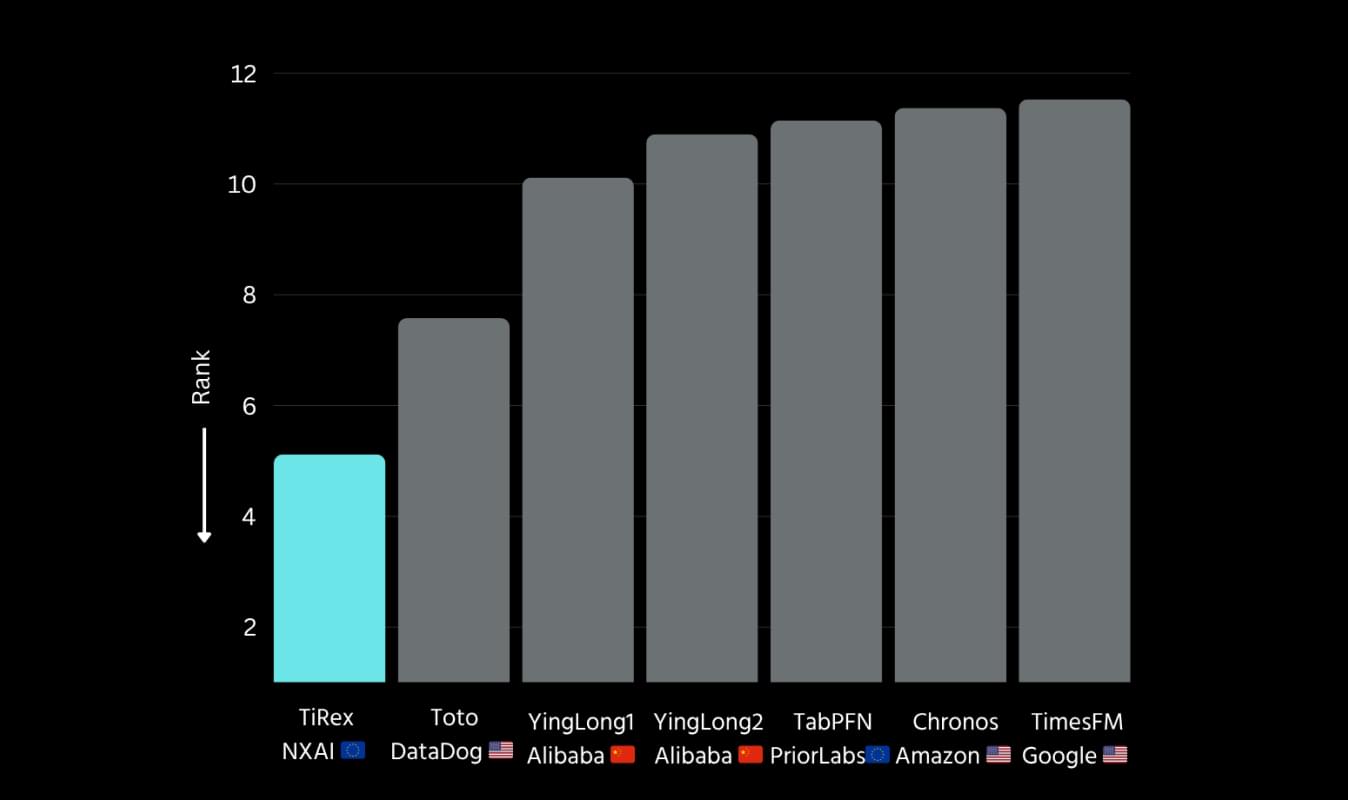

Average Rank of top models on GiftEval Leaderboard

“That’s a major advantage of our xLSTM technology – we can adapt the model to a wide range of hardware. Transformer models simply can’t do that.”